Retail is one of the top industries being transformed by AI and analytics technology. Retail marketers need to segment and profile diverse groups of consumers while keeping up with ever-evolving fashion trends. Category managers need detailed information on spending patterns, consumer demand, suppliers, and markets so they can challenge how goods and services are acquired and delivered.

With evolving technology and millennials driving changes in buyer behavior, the retail industry must offer a cohesive user experience. This can be achieved through an omni-channel strategy that gives customers an optimal physical and digital presence at every touchpoint.

Omni-channel Strategy Calls for Reliable Data

An omni-channel strategy creates strong internal demand for insight, analytics, and the innovative management and delivery of high-quality information. A combination of traditional canned BI and ad-hoc self-service is key. During data warehousing and business intelligence projects, traditional BI teams spend a lot of time developing and testing information to ensure accuracy and reliability. However, once the new information delivery pipeline of ETL processes, star schemas, reports, and dashboards is in place, support teams spend little time ensuring that data quality is maintained. The impact of bad data includes poor business decisions, missed opportunities, revenue and productivity losses, and increased expenses.

Because of the complexity of the data flows, the volume of data, and the speed at which information is created, retailers face data quality issues caused by data entry and ETL problems. When complex calculations are used in databases or dashboards, incorrect data can lead to blank cells, unexpected zero values, or even incorrect results, which makes the information less useful and can cause managers to doubt its integrity. To take a simple example: if a manager receives a budget utilization report before the budget numbers have been processed, the revenue vs. budget calculation will result in an error.
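A minimal Python sketch of the budget example, assuming a hypothetical budget utilization metric defined as revenue divided by budget, with a budget that has not yet been processed represented as `None`. The function names are illustrative and not part of Cognos: the naive version fails outright, while the guarded version returns `None` so that a data quality check can flag the value instead of passing an error on to the report.

```python
from typing import Optional


def budget_utilization(revenue: float, budget: Optional[float]) -> float:
    """Naive revenue vs. budget ratio (hypothetical metric)."""
    # Raises TypeError if budget is None, ZeroDivisionError if budget is 0.
    return revenue / budget


def safe_budget_utilization(revenue: float,
                            budget: Optional[float]) -> Optional[float]:
    """Guarded ratio: returns None when the budget is missing or zero,
    so a downstream check can flag the value instead of failing."""
    if budget is None or budget == 0:
        return None
    return revenue / budget


if __name__ == "__main__":
    print(safe_budget_utilization(90_000.0, 100_000.0))  # 0.9
    print(safe_budget_utilization(90_000.0, None))       # None (budget not processed yet)
    try:
        budget_utilization(90_000.0, None)
    except TypeError as exc:
        print(f"Naive calculation failed: {exc}")
```

Returning `None` rather than `0` matters here: a zero would look like a legitimate (if alarming) utilization figure, whereas a missing value is unambiguous for an automated check.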

Managing Data Issues Proactively

BI teams want to stay ahead of the curve and be notified of any data issue before information is delivered to end users. Since manual checking is not an option, one of the largest retailers designed a Data Quality Assurance (DQA) program that automatically checks dashboards and flash reports before they are delivered to management.

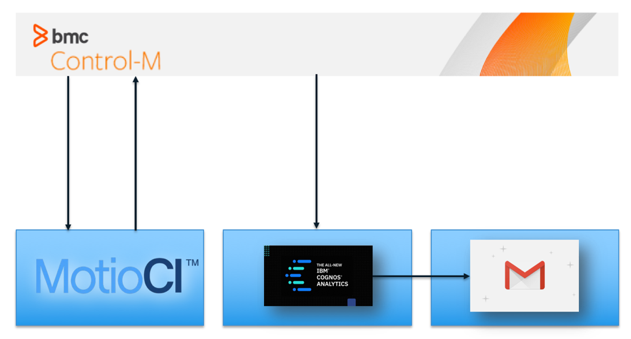

Scheduling tools such as Control-M or JobScheduler are workflow orchestration tools used to kick off the Cognos reports and dashboards that are delivered to business managers. Reports and dashboards are delivered based on triggers, such as the completion of an ETL process, or at fixed time intervals (for example, every hour). With the new DQA program, the scheduling tool asks MotioCI to test the data prior to delivery. MotioCI is a version control, deployment, and automated testing tool for Cognos Analytics that can test reports for data issues such as blank fields, incorrect calculations, or unwanted zero values.
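The trigger-and-test flow can be sketched in Python. This is not Control-M or JobScheduler syntax; it only models the logic, assuming a hypothetical job that fires when an ETL process completes or when an hour has elapsed, and that passes each report through a test step (standing in for the request to MotioCI) before delivery. `run_dqa_test` and `deliver` are placeholder callables, not real APIs.

```python
from datetime import datetime, timedelta
from typing import Callable


def should_trigger(etl_completed: bool, last_run: datetime, now: datetime,
                   interval: timedelta = timedelta(hours=1)) -> bool:
    """Fire when the upstream ETL has finished or the time interval has elapsed."""
    return etl_completed or (now - last_run) >= interval


def deliver_with_dqa(report: str,
                     run_dqa_test: Callable[[str], bool],
                     deliver: Callable[[str], None]) -> bool:
    """Test the report's data first; deliver only if the test passes."""
    if not run_dqa_test(report):
        return False  # hold the report (notifying the BI team is not modeled here)
    deliver(report)
    return True


if __name__ == "__main__":
    sent: list[str] = []
    if should_trigger(False, datetime(2024, 11, 1, 8, 0), datetime(2024, 11, 1, 9, 0)):
        # Placeholder test that always passes; a real setup would ask MotioCI.
        deliver_with_dqa("Daily Flash", lambda report: True, sent.append)
    print(sent)  # ['Daily Flash']
```

The point of the design is that delivery is gated on the test result rather than on the trigger alone, so a completed ETL run no longer implies that reports go straight to managers.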

Interaction between the scheduling tool (Control-M), MotioCI, and Cognos Analytics

Because calculations in dashboards and flash reports can be fairly complex, it is not feasible to test every single data item. To tackle this, the BI team added a validation page to the reports. This page lists the critical data that must be verified before analytics are delivered to the different lines of business, so MotioCI only needs to test the validation page. The validation page is for internal BICC purposes only and should not be included in the delivery to end users. Generating it only for MotioCI was handled through smart prompting: a parameter controls whether a run produces the report itself or the validation page that MotioCI uses to test the report.
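A minimal Python sketch of the validation-page idea and the parameter that switches between outputs. Everything here is hypothetical: in the real solution the validation page is a Cognos report page and MotioCI performs the checks. The sketch models a `mode` parameter that returns either the end-user data or the validation results, and applies the three kinds of checks mentioned above: blank values, unwanted zeros, and calculations that do not match an expected value.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ValidationRow:
    """One critical data item listed on the hypothetical validation page."""
    name: str
    value: Optional[float]              # None models a blank cell
    expected: Optional[float] = None    # optional cross-check for a calculation
    zero_allowed: bool = False


def check_row(row: ValidationRow, tolerance: float = 0.01) -> list[str]:
    """Return the data issues found for one validation item."""
    if row.value is None:
        return [f"{row.name}: blank value"]
    issues = []
    if row.value == 0 and not row.zero_allowed:
        issues.append(f"{row.name}: unexpected zero")
    if row.expected is not None and abs(row.value - row.expected) > tolerance:
        issues.append(f"{row.name}: calculation mismatch ({row.value} vs {row.expected})")
    return issues


def run_report(rows: list[ValidationRow], mode: str = "report") -> dict:
    """Prompt-style switch: one parameter selects the end-user output
    or the validation page used for testing."""
    if mode == "validation":
        issues = [issue for row in rows for issue in check_row(row)]
        return {"page": "validation", "passed": not issues, "issues": issues}
    if mode == "report":
        # Validation results are deliberately excluded from end-user output.
        return {"page": "report", "data": {row.name: row.value for row in rows}}
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    rows = [
        ValidationRow("Net sales", 1200.0, expected=1200.0),
        ValidationRow("Budget", None),
        ValidationRow("Units sold", 0.0),
    ]
    print(run_report(rows, mode="validation")["issues"])
    # ['Budget: blank value', 'Units sold: unexpected zero']
```

Testing a short list of critical items, rather than every cell, keeps the check fast enough to run on every scheduled delivery.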

Integrating Control-M, MotioCI, & Cognos Analytics

Another complex aspect is the interaction between the scheduling tool and MotioCI. The scheduled job can only request information; it cannot receive it. MotioCI therefore writes the status of its testing activities to a dedicated table in its database, which the scheduler polls frequently. Examples of status messages include:

- “Come back later, I’m still busy.”

- “I found an issue.”

- When the test passes: “All good, send out the analytic information.”
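Because the scheduler can only ask and never be told, the status table works as a simple polling protocol. The Python sketch below uses an in-memory SQLite database to stand in for MotioCI's database; the table name `dqa_status`, its columns, and the `RUNNING`/`FAILED`/`PASSED` codes are hypothetical stand-ins for the messages above, not MotioCI's actual schema.

```python
import sqlite3
import time

# Hypothetical status codes mirroring the three messages.
RUNNING = "RUNNING"  # "Come back later, I'm still busy."
FAILED = "FAILED"    # "I found an issue."
PASSED = "PASSED"    # "All good, send out the analytic information."


def read_status(conn: sqlite3.Connection, job_id: str) -> str:
    """Read the current test status for a job; no row yet counts as still running."""
    row = conn.execute(
        "SELECT status FROM dqa_status WHERE job_id = ?", (job_id,)
    ).fetchone()
    return row[0] if row else RUNNING


def poll_until_done(conn: sqlite3.Connection, job_id: str,
                    interval_s: float = 30.0, max_attempts: int = 120) -> str:
    """Ping the status table until the test finishes or attempts run out."""
    for _ in range(max_attempts):
        status = read_status(conn, job_id)
        if status != RUNNING:
            return status
        time.sleep(interval_s)
    return "TIMEOUT"


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE dqa_status (job_id TEXT PRIMARY KEY, status TEXT)")
    conn.execute("INSERT INTO dqa_status VALUES (?, ?)", ("flash-report", PASSED))
    print(poll_until_done(conn, "flash-report", interval_s=0))  # PASSED
```

The `max_attempts` cap is an assumed design choice: it keeps a stuck test from blocking the schedule indefinitely and hands the decision back to the operations team.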

The last smart design decision was to split the verification process into separate jobs. The first job only executes the DQA testing of the analytic data; the second job triggers Cognos to send out the reports. An enterprise-level scheduling and process automation tool is used for many different tasks: every day it executes many jobs, not only for Cognos and not only for BI, and an operations team continuously monitors them. A data issue identified by MotioCI may lead to a fix. Because the jobs are separate and time is critical in retail, the team can then decide to send out the reports without running the whole DQA test again.
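Splitting the work into two jobs can be modeled as two functions joined only by the recorded DQA result. This Python sketch is an assumption about the control flow, not the customer's actual job definitions: `dqa_job` runs a placeholder test, and `delivery_job` sends the reports if the test passed or if the operations team explicitly releases them after a fix, without rerunning the test.

```python
from enum import Enum
from typing import Callable, Optional


class DqaResult(Enum):
    PASSED = "passed"
    FAILED = "failed"


def dqa_job(run_test: Callable[[], bool]) -> DqaResult:
    """Job 1: only test the analytic data and record the outcome."""
    return DqaResult.PASSED if run_test() else DqaResult.FAILED


def delivery_job(dqa_result: Optional[DqaResult],
                 operator_release: bool = False) -> str:
    """Job 2: send the reports if the test passed, or if operations
    released them after fixing a data issue (no DQA rerun)."""
    if dqa_result is DqaResult.PASSED or operator_release:
        return "sent"
    return "held"


if __name__ == "__main__":
    result = dqa_job(lambda: False)                     # placeholder test that finds an issue
    print(delivery_job(result))                         # held
    print(delivery_job(result, operator_release=True))  # sent, after the fix
```

Because the recorded result is the only link between the two jobs, operations can rerun job 2 on its own, which is what saves time during the retail peak.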

Delivering the Solution Quickly

Starting a data quality project in the fall always comes with extremely high time pressure: Black Friday looms on the horizon. Because this is a high-revenue period, most retail companies avoid implementing IT changes to reduce the risk of production disruption. The team therefore needed to deliver the results in production before this IT freeze. To ensure that the multi-time-zone team of the customer, Motio, and our offshore partner Quanam met its deadlines, the project followed an agile strategy with daily stand-ups and delivered results faster than planned. All Data Quality Assurance processes were implemented within 7 weeks, using only 80% of the allocated budget. Extensive knowledge and a “hands-on” approach were driving factors in this project’s success.

Analytics is key for retail managers during the holiday season. By ensuring that information is automatically checked and verified, our customer has taken another step toward continuing to offer its customers high-quality, on-trend products at affordable prices.