Preparing To Move To The Cloud

We are now in the second decade of cloud adoption. As many as 92% of businesses are using cloud computing to some degree. The pandemic has been a recent driver for organizations to adopt cloud technologies. Successfully moving additional data, projects and applications to the cloud depends on preparation, planning and problem anticipation.

- Preparation is about data and the human management of the data and supporting infrastructure.

- Planning is essential. The plan needs to contain specific key elements.

- Problem management is the ability to foresee potential areas of trouble and the ability to navigate them if encountered.

Four Things a Business Must Do to Be Successful in the Cloud, Plus 7 Gotchas

Your business is going to move to the cloud. Well, let me rephrase that: if your business is going to be successful, it’s going to move to the cloud, that is, if it is not there already. If you’re already there, you probably wouldn’t be reading this. Your company is forward-thinking and intends to take advantage of all of the cloud’s benefits we discussed in another article. As of 2020, 92% of businesses were using the cloud to some extent and 50% of all corporate data was already in the cloud.

The silver lining on the COVID cloud: the pandemic has forced businesses to look more closely at cloud capabilities to support the new paradigm of a remote workforce. The cloud refers to both large-scale data storage and the applications which process that data. One of the main reasons to move to the cloud is to gain a competitive advantage by being flexible and gaining new insights from the boatloads of data.

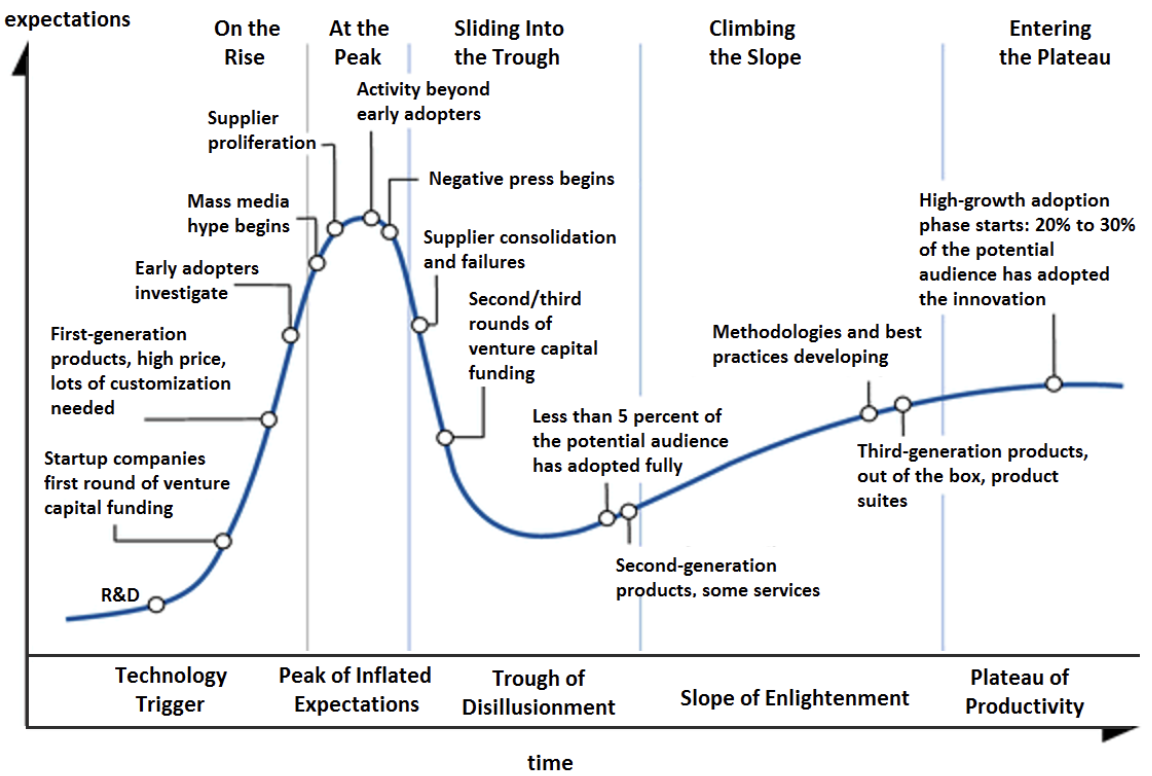

The analyst firm Gartner regularly publishes a report which discusses “technologies and trends that show promise in delivering a high degree of competitive advantage over the next five to 10 years.” Ten years ago, Gartner’s 2012 Hype Cycle for Cloud Computing put Cloud Computing and Public Cloud Storage in the “Trough of Disillusionment” just beyond the “Peak of Inflated Expectations.” Further, Big Data was just entering the “Peak of Inflated Expectations.” All three had an expected plateau in 3 to 5 years. Software as a Service (SaaS) was placed by Gartner in the “Slope of Enlightenment” phase with an expected plateau in 2 to 5 years.

In 2018, six years later, “Cloud Computing” and “Public Cloud Storage” were in the “Slope of Enlightenment” phase with a projected plateau of less than 2 years. “Software as a Service” had reached the plateau. The point is that there was significant adoption of the public cloud in this period.

Today, in 2022, cloud computing is in its second decade of adoption and is the default technology for new applications. As Gartner puts it, “If it is not cloud, it is legacy.” Gartner goes on to say that the impact of cloud computing on an organization is transformational. How then should organizations approach this transformation?

This chart describes in more detail what it means for a technology to be in a particular phase.

How should organizations approach organizational transformation?

In their process of adoption of the cloud, organizations have had to make decisions, establish new policies, create new procedures and address specific challenges. Here’s a list of specific areas you’ll need to resolve to be sure your house is in order:

- Training, re-training or new roles. In adopting the public cloud for data storage or leveraging the applications, you’ve outsourced the support and maintenance of the infrastructure. You still need inhouse expertise to manage the vendor and access the data. Furthermore, you need to know how to leverage the new tools you have available for cognitive analytics and data science.

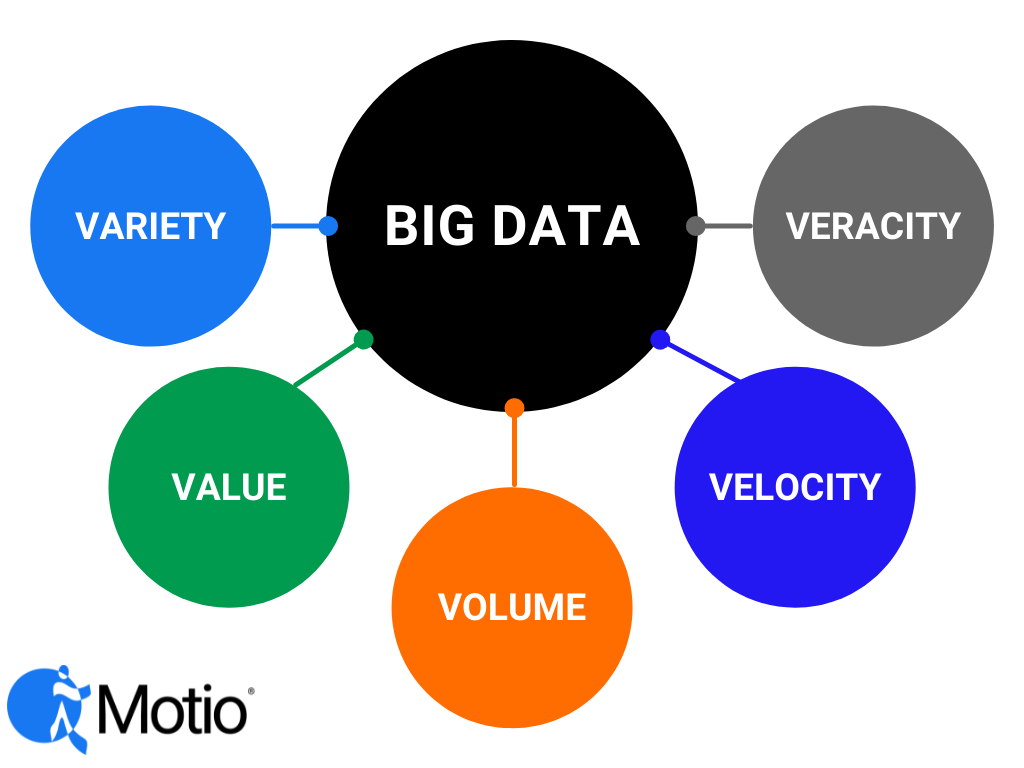

- Data. It’s all about the data. Data is the new currency. We’re talking about Big Data– data which meets at least some of the V’s of the definition. In moving to the cloud, at least some of your data will be in the cloud. If you’re “all-in”, your data will be stored in the cloud and processed in the cloud.

A. Availability of data. Can your existing on-prem applications access the data in the cloud? Is your data where it needs to be for processing? Do you need to budget time in your cloud migration project to move your data to the cloud? How long will that take? Do you need to develop new processes to get your transactional data to the cloud? If you intend to perform AI or machine learning, there must be sufficient training data to meet the desired level of accuracy and precision.

B. Usability of data. Is your data in a format that can be consumed by the people and tools that will be accessing the data? Can you perform a “lift-and-shift” on your data warehouse? Or, can it be optimized for performance?

C. Quality of data. The quality of the data on which your decisions rely can affect the quality of your decisions. Governance, data stewards, data management, perhaps a data curator may play a significant role in the adoption of cognitive analytics in the cloud. Take the time before you migrate the data to the cloud to assess the quality of your data. There’s nothing more frustrating than discovering that you’ve migrated data that you don’t need.

D. Variability and uncertainty in big data. Data may be inconsistent or incomplete. In evaluating your data and how you intend to use it, are there gaps? Now is the time to fix known issues related to enterprise-wide standards on data. Standardize across reporting centers on simple things like time dimensions and geography hierarchies. Identify that single source of truth.

E. Limitations inherent in big data itself. A large number of potential results may require a domain expert to evaluate the results for significance. In other words, if your query returns a lot of records, how will you as a human process them? To filter the results further and reduce the number of records, so that they can be consumed by an ordinary non-superhuman, you will need to know the business behind the data.

3. Supporting the foundation/infrastructure of IT. Consider all the moving parts. It’s likely that not all of your data will be in the cloud. Some may be in the cloud. Some on-premises. Still other data may be in another vendor’s cloud. Do you have a data flow diagram? Are you prepared to move from managing physical hardware to managing vendors who manage physical hardware? Do you understand the limitations of the cloud environment? Have you accounted for the ability to support unstructured data as well as key platform-enabling technologies? Will you still be able to use the same SDKs, APIs and data utilities you’ve been using on-premises? Code that depends on them will likely need to be rewritten. What about your existing ETL to load the data warehouse from transactional systems? Expect to rewrite those ETL scripts as well.

4. Refining roles. Users may need to be retrained on the new applications and how to access data in the cloud. Often a desktop or network application may have the same or similar name as one dedicated to the cloud. It, however, may function differently, or even have a different feature set.
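The availability questions above ask how long it will take to move your data to the cloud. A rough, back-of-the-envelope estimate can be sketched as follows. This is only an illustration: the function name, the `efficiency` factor and the sample figures are assumptions made for this sketch, not numbers from any vendor, and real transfers are also affected by throttling, retries and file counts.

```python
def estimate_transfer_hours(data_gb: float, bandwidth_mbps: float,
                            efficiency: float = 0.8) -> float:
    """Estimate the hours needed to copy `data_gb` gigabytes over a network link.

    bandwidth_mbps: nominal link speed in megabits per second.
    efficiency: assumed fraction (0 < efficiency <= 1) of the nominal speed
                that is actually achieved; 0.8 is a placeholder, not a measurement.
    """
    if data_gb < 0:
        raise ValueError("data_gb must not be negative")
    if bandwidth_mbps <= 0:
        raise ValueError("bandwidth_mbps must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")

    megabits = data_gb * 8 * 1000          # decimal units: 1 GB = 8,000 megabits
    seconds = megabits / (bandwidth_mbps * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    # Hypothetical example: 5 TB over a 500 Mbps link at 80% efficiency.
    hours = estimate_transfer_hours(data_gb=5000, bandwidth_mbps=500)
    print(f"Estimated transfer time: {hours:.1f} hours")  # 27.8 hours
```

Even a crude number like this helps decide whether a network copy fits inside a maintenance window or whether the migration plan needs a phased approach.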

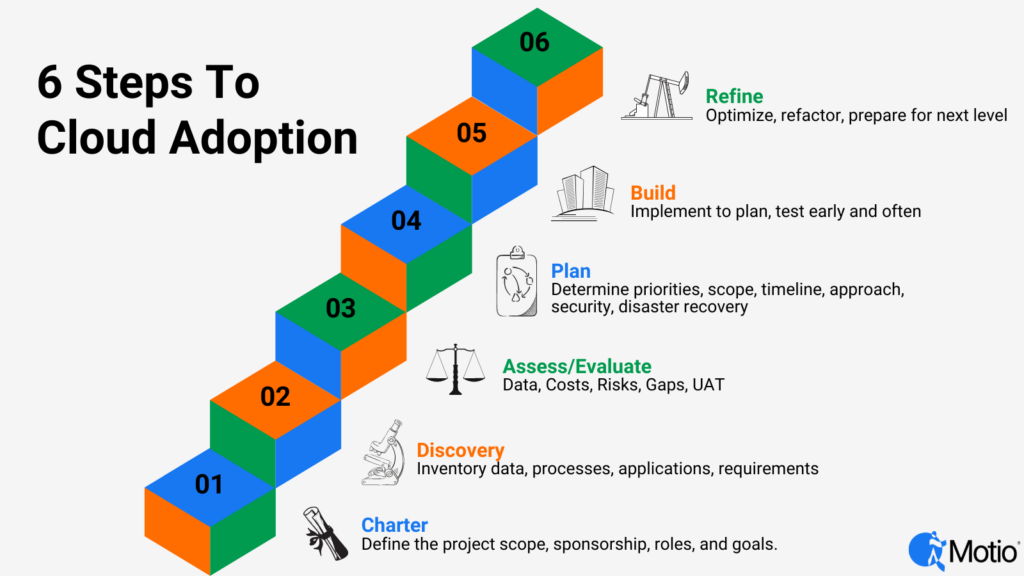

If your organization is serious about moving to the cloud and making the most of analytics, there is no debate that the move can provide significant business and economic value. Practically speaking, to get there from here, you’ll need to:

1. Establish a charter.

A. Have you defined the scope of your project?

B. Do you have executive sponsorship?

C. Who – what roles – should be included in the project? Who is the chief architect? What expertise do you need to rely on the cloud vendor for?

D. What is the end goal? By the way, the goal is not “moving to the cloud”. What problem(s) are you attempting to solve?

E. Define your success criteria. How will you know you are successful?

2. Discover. Start at the beginning. Take inventory. Find out what you have. Answer the questions:

A. What data do we have?

B. Where is the data?

C. What business processes need to be supported? What data do those processes need?

D. What tools and applications do we currently use to manipulate the data?

E. What is the size and complexity of the data?

F. What will we have? What applications are available in the cloud from our vendor?

G. How will we connect to the data? What ports will need to be open in the cloud?

H. Are there any regulations or requirements which dictate privacy or security requirements? Are there SLAs with customers which need to be maintained?

I. Do you know how costs will be calculated for cloud usage?

3. Assess and evaluate.

A. What data do we intend to move?

B. Assess costs. Now that you know the scope and volume of data, you’re in a better position to define a budget.

C. Define the gaps between what you currently have and what you expect to have. What are we missing?

D. Include a test migration to expose what you’ve missed in theory.

E. Include User Acceptance Testing in this phase as well as in the final phase.

F. What challenges can you anticipate so that you can build contingencies into the next phase?

G. What risks have been identified?

4. Plan. Establish a road map.

A. What are the priorities? What comes first? What is the sequence?

B. What can you exclude? How can you reduce the scope?

C. Will there be a period when the old and new systems run in parallel?

D. What is the approach? Partial / phased approach?

E. Have you defined the security approach?

F. Have you defined data backup and disaster recovery plans?

G. What is the communication plan – internal to project, to stakeholders, to end-users?

5. Build. Migrate. Test. Launch.

A. Work the plan. Revise it dynamically based on new information.

B. Build on your historical strengths and successes, your legacy IT foundation, and begin taking advantage of the benefits of Big Data and cognitive analytics.

6. Iterate and Refine.

A. When can you retire the servers that are now sitting idle?

B. What refactoring have you discovered that needs to be done?

C. What optimizations can be made to your data in the cloud?

D. What new data applications can you now use in the cloud?

E. What’s the next level? AI, machine learning, advanced analytics?
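The Discover, Assess and Plan steps above ask what data you have, how large it is, and what you can exclude. One way to make that inventory concrete is sketched below. It is an illustration only: the `Dataset` fields, the one-year idle threshold and the sample entries are hypothetical, and a real inventory would come from your own catalog or storage reports.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Dataset:
    """One entry in a (hypothetical) data inventory."""
    name: str
    size_gb: float
    last_accessed: date
    trusted: bool  # has a data steward signed off on its quality?


def split_migration_scope(datasets, today, max_idle_days=365):
    """Split an inventory into datasets to move and datasets to exclude.

    A dataset is kept in scope only if it is trusted and has been accessed
    within `max_idle_days`. The threshold is an assumption for this sketch.
    """
    move, exclude = [], []
    for ds in datasets:
        idle_days = (today - ds.last_accessed).days
        if ds.trusted and idle_days <= max_idle_days:
            move.append(ds)
        else:
            exclude.append(ds)
    return move, exclude


def total_size_gb(datasets):
    """Total size of a list of datasets, in gigabytes."""
    return sum(ds.size_gb for ds in datasets)


if __name__ == "__main__":
    inventory = [
        Dataset("orders", 800, date(2022, 5, 1), trusted=True),
        Dataset("archive_2015", 2500, date(2019, 1, 1), trusted=True),
        Dataset("scratch_exports", 300, date(2022, 5, 20), trusted=False),
    ]
    move, exclude = split_migration_scope(inventory, today=date(2022, 6, 1))
    print("Move:", [ds.name for ds in move], total_size_gb(move), "GB")
    print("Review or exclude:", [ds.name for ds in exclude])
```

The excluded list is a starting point for a conversation with the data owners, not an automatic delete list.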

Gotchas

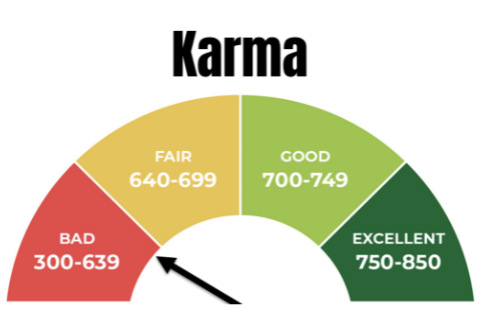

Some sources say that as many as 70% of technology projects are total or partial failures. Apparently, it depends on your definition of failure. Another source found that 75% thought their project was doomed from the start. That might mean that 5% succeeded in spite of the odds being against them. My experience tells me that there is a significant fraction of technology projects which either never get off the ground or fail to fully realize the promised expectations. There are some common themes that those projects share. As you start planning your migration to the cloud, here are some gotchas to look out for. If you don’t, they’re like bad karma, or a bad credit score: sooner or later, they’ll bite you in the butt.

- Ownership. A single person must own the project from a management perspective. At the same time, all participants must feel invested as stakeholders.

- Cost. Has budget been allocated? Do you know the order of magnitude of costs for the next 12 months as well as an estimate of on-going costs? Are there any potential hidden costs? Have you jettisoned any excess flotsam and jetsam in preparation for the move? You don’t want to migrate data that won’t be used, or isn’t trusted.

- Leadership. Is the project fully sponsored by management? Are expectations and the definition of success realistic? Do the objectives align with corporate vision and strategy?

- Project Management. Are timelines, scope and budget realistic? Are there “forces” demanding shorter delivery deadlines, increased scope and/or lower costs or fewer people? Is there a firm grasp on the requirements? Are they realistic and well-defined?

- Human Resources. Technology is the easy part. It’s the people thing that can be a challenge. Migrating to the cloud will bring changes. People don’t like change. You need to set expectations appropriately. Have sufficient and appropriate staff been dedicated to the initiative? Or, have you tried to carve out time from people who are already too busy with their day job? Are you able to maintain a stable team? Many projects fail because of turnover in key personnel.

- Risks. Have risks been identified and managed successfully?

- Contingency. Have you been able to identify things that are out of your control but which may impact delivery? Consider the effect of a change in leadership. How would a world-wide pandemic affect your ability to meet deadlines and get resources?

The Cloud Computing Hype Cycle in 2022

So where are Cloud Computing, Public Cloud Storage and Software as a Service on Gartner’s emerging technology hype cycle today? They’re not. They are no longer up-and-coming technologies. They’re no longer on the horizon. They’re mainstream, waiting to be adopted. Watch for growth in the following emerging technologies: AI-Augmented Design, Generative AI, Physics-Informed AI and Non-Fungible Tokens.

The ideas in this article were originally presented as the conclusion to the article “Cognitive Analytics: Building on Your Legacy IT Foundation” presented in TDWI Business Intelligence Journal, Vol 22, No. 4.