Teasers

When did we first see data?

- Mid-twentieth century

- As a successor to the Vulcan, Spock

- 18,000 BC

- Who knows?

As far back as the archaeological record reaches, we find humans using data. Interestingly, data even precedes written numbers. Some of the earliest examples of stored data date from around 18,000 BC, when our ancestors on the African continent used marks on sticks as a form of bookkeeping. Answers 2 and 4 will also be accepted. It was in the mid-twentieth century, though, that Business Intelligence was first defined as we understand it today, and BI didn’t become widespread until nearly the turn of the 21st century.

The benefits of data quality are obvious.

- Trust. Users will trust the data more. Consider the headline “75% of Executives Don’t Trust Their Data.”

- Better decisions. You’ll be able to apply analytics to the data to make smarter decisions. Data quality is one of the two biggest challenges facing organizations adopting AI; the other is staff skill sets.

- Competitive advantage. The quality of data affects operational efficiency, customer service, marketing and, ultimately, the bottom line.

- Success. Data quality is strongly linked to business success.

6 Key Elements of Data Quality

If you can’t trust your data, how can you respect its advice?

Today, the quality of data is critical to the validity of the decisions businesses make with BI tools, analytics, machine learning and artificial intelligence. At its simplest, quality data is data that is valid and complete. You may have seen data quality problems in the headlines:

- CDC’s COVID-19 Data Improvement – “Over the course of the pandemic, CDC has been improving the timeliness, completeness, and quality of critical data for the response.”

- Garbage in, garbage out; city watchdog finds troubling pattern of unreliable data quality – “A new report from the [Chicago] acting inspector general says ‘data quality issues’ affect the ‘objectivity, utility and integrity’ of the information used to allocate resources, measure employee performance and monitor a host of programs.”

- GAO finds data quality issues during VA’s EHR rollout – “The VA did not ensure the quality of data migrated to its new Cerner EHR system.”

In some ways, even well into the third decade of Business Intelligence, achieving and maintaining data quality is more difficult than ever. Some of the challenges that contribute to the constant struggle to maintain data quality include:

- Mergers and acquisitions that try to bring together disparate systems, processes, tools and data from multiple entities.

- Internal data silos without the standards needed to reconcile and integrate their data.

- Cheap storage has made it easier to capture and retain large amounts of data. We capture more data than we can analyze.

- The complexity of data systems has grown. There are more touchpoints between the system of record, where data is entered, and the point of consumption, whether that is a data warehouse or the cloud.

What aspects of data are we talking about? What properties of the data contribute to its quality? There are six elements that contribute to data quality, and each of them is an entire discipline.

- Timeliness

  - Data is ready and usable when it is needed.

  - For example, the data is available for end-of-month reporting within the first week of the following month.

- Validity

  - The data has the correct data type in the database: text is text, dates are dates and numbers are numbers.

  - Values are within expected ranges. For example, while 212 degrees Fahrenheit is a real, measurable temperature, it is not a valid human body temperature.

  - Values have the correct format. 1.000000 does not carry the same meaning (or implied precision) as 1.

- Consistency

  - The data is internally consistent: records do not contradict one another.

  - There are no duplicate records.

- Integrity

  - Relationships between tables are reliable; for example, every reference to a customer points to a customer that exists.

  - Data is not unintentionally changed, and values can be traced to their origins.

- Completeness

  - There are no “holes” in the data: every element of a record has a value.

  - There are no NULL values where a value is required.

- Accuracy

  - Data in the reporting or analytic environment (the data warehouse, whether on-premises or in the cloud) reflects the source systems, or systems of record.

  - Data is from verifiable sources.
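Several of these elements translate directly into automated checks. Below is a minimal, hypothetical sketch in Python using only the standard library. The temperature-readings data, the `PATIENT_IDS` set, the assumed 90 to 110 °F range and the function names are all invented for illustration and do not come from any particular tool. It covers completeness, validity, consistency (duplicate records) and integrity (references to a parent table). Timeliness and accuracy depend on load schedules and source systems, which a self-contained example cannot show.

```python
from datetime import date

# Hypothetical sample data: body-temperature readings that reference a
# patients table. Rows 1 to 4 are each seeded with one kind of defect.
PATIENT_IDS = {1, 2, 3}
READINGS = [
    {"reading_id": 101, "patient_id": 1, "temp_f": 98.6, "taken_on": date(2023, 1, 5)},
    {"reading_id": 102, "patient_id": 2, "temp_f": 212.0, "taken_on": date(2023, 1, 5)},  # out of range
    {"reading_id": 103, "patient_id": 9, "temp_f": 99.1, "taken_on": date(2023, 1, 6)},   # unknown patient
    {"reading_id": 104, "patient_id": 3, "temp_f": None, "taken_on": date(2023, 1, 6)},   # missing value
    {"reading_id": 101, "patient_id": 1, "temp_f": 98.6, "taken_on": date(2023, 1, 5)},   # duplicate of row 0
]
REQUIRED_FIELDS = ("reading_id", "patient_id", "temp_f", "taken_on")
TEMP_RANGE_F = (90.0, 110.0)  # assumed plausible human range, degrees Fahrenheit


def check_completeness(rows):
    """Completeness: (row index, field) for each required field that is missing or None."""
    return [(i, f) for i, row in enumerate(rows) for f in REQUIRED_FIELDS if row.get(f) is None]


def check_validity(rows):
    """Validity: values have the expected type and fall within the expected range."""
    low, high = TEMP_RANGE_F
    problems = []
    for i, row in enumerate(rows):
        temp, taken_on = row.get("temp_f"), row.get("taken_on")
        # None values are left to the completeness check.
        if temp is not None and not isinstance(temp, (int, float)):
            problems.append((i, "temp_f is not a number"))
        elif temp is not None and not low <= temp <= high:
            problems.append((i, f"temp_f {temp} outside {low}-{high}"))
        if taken_on is not None and not isinstance(taken_on, date):
            problems.append((i, "taken_on is not a date"))
    return problems


def check_duplicates(rows):
    """Consistency: (duplicate index, first index) for rows identical to an earlier row."""
    seen, dupes = {}, []
    for i, row in enumerate(rows):
        key = tuple(sorted(row.items()))  # field names are unique, so values are never compared
        if key in seen:
            dupes.append((i, seen[key]))
        else:
            seen[key] = i
    return dupes


def check_integrity(rows, parent_ids):
    """Integrity: (row index, patient_id) for references to patients that do not exist."""
    return [
        (i, row["patient_id"])
        for i, row in enumerate(rows)
        if row.get("patient_id") is not None and row["patient_id"] not in parent_ids
    ]


if __name__ == "__main__":
    print("completeness:", check_completeness(READINGS))
    print("validity:    ", check_validity(READINGS))
    print("duplicates:  ", check_duplicates(READINGS))
    print("integrity:   ", check_integrity(READINGS, PATIENT_IDS))
```

Each check returns a list of problems that identify the offending row, so an empty list means the check passed. In a real pipeline, checks like these would more likely be written in SQL against the warehouse and run on a schedule; plain Python is used here only to keep the example self-contained.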

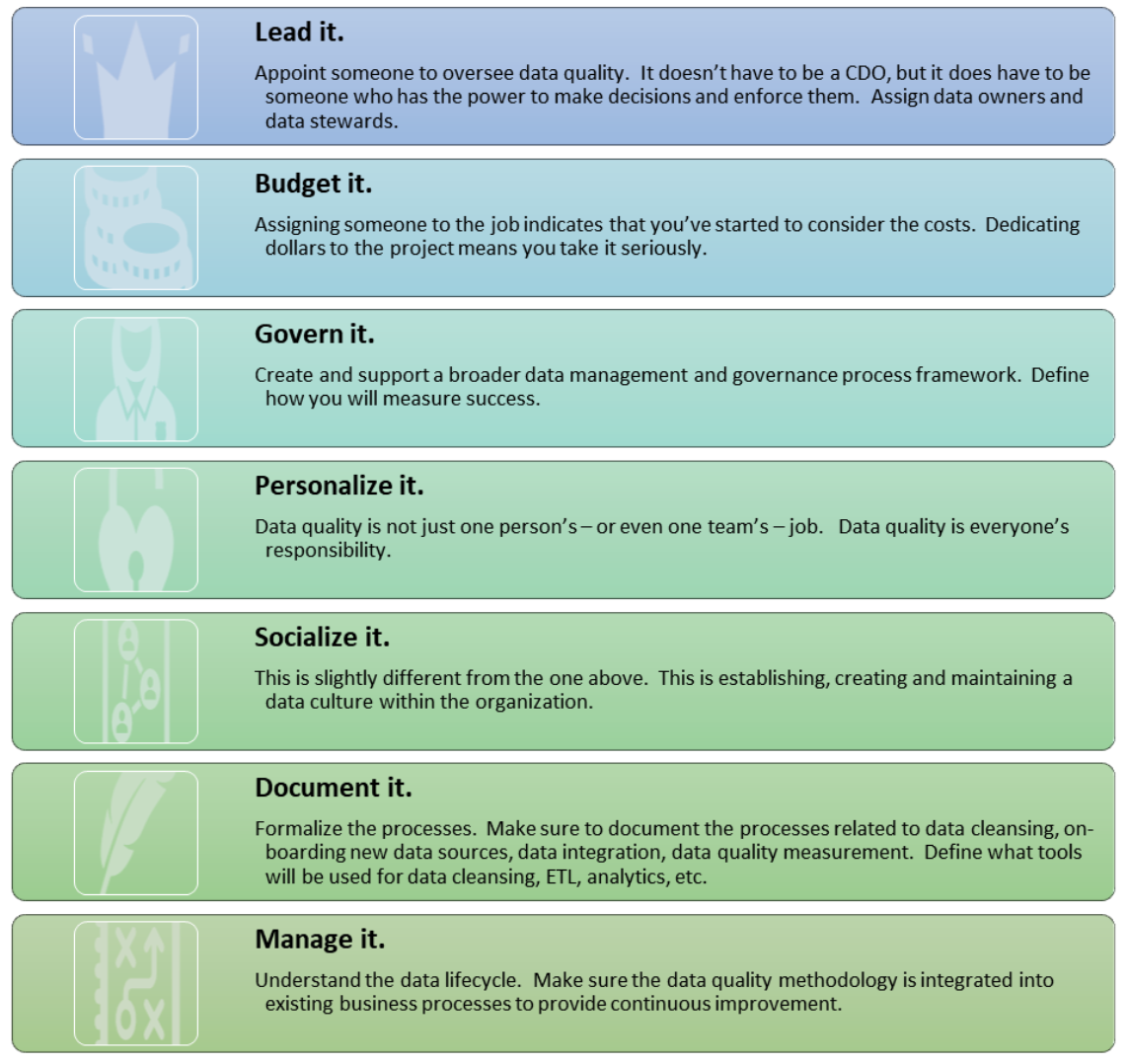

We agree, then, that the challenge of data quality is as old as data itself, and that the problem is ubiquitous and vital to resolve. So, what do we do about it? Treat your data quality program as a long-term, never-ending effort.

Data quality is, at bottom, a measure of how accurately data represents reality. To be honest, some data is more important than other data. Know which data is critical to sound business decisions and to the success of the organization. Start there, and focus on that data.

As Data Quality 101, this article is a freshman-level introduction to the topic: its history, current events, the challenges, why they matter and a high-level overview of how to address data quality within an organization. Let us know if you’d like a deeper look at any of these topics in a 200-level or graduate-level article. If so, we’ll dive into the specifics in the coming months.